The Problem

Like many other self-styled thinky programmer guys, I like to imagine myself as a sort of Holmesian genius, making trenchant observations, collecting them, and then synergizing them into brilliant deductions with the keen application of my powerful mind.

However, several years ago, I had an epiphany in my self-concept. I finally understood that, to the extent that I am usefully clever, it is less in a Holmesian idiom, and more, shall we say, Monkesque.

For those unfamiliar with either of the respective franchises:

- Holmes is a towering intellect honed by years of training, who catalogues intentional, systematic observations and deduces logical, factual conclusions from those observations.

- Monk, on the other hand, while also a reasonably intelligent guy, is highly neurotic, wracked by unresolved trauma and profound grief. As both a consulting job and a coping mechanism, he makes a habit of erratically wandering into crime scenes, and, driven by a carefully managed Jenga tower of mental illnesses, leverages his dual inabilities to solve crimes. First, he is unable to filter out apparently inconsequential details, building up a mental rat’s nest of trivia about the problem; second, he is unable to let go of any minor incongruity, obsessively ruminating on the collection of facts until they all make sense in a consistent timeline.

Perhaps surprisingly, this tendency serves both this fictional wretch of a detective and myself reasonably well. I find annoying incongruities in abstractions and I fidget and fiddle with them until I end up building something that a lot of people like, or perhaps something that a smaller number of people get really excited about. At worst, at least I eventually understand what’s going on. This is a self-soothing activity, but it turns out that, managed properly, it can very effectively soothe others as well.

All that brings us to today’s topic, which is an incongruity I cannot smooth out or fit into a logical framework to make sense. I am, somewhat reluctantly, a genAI skeptic. However, I am, even more reluctantly, exposed to genAI Discourse every damn minute of every damn day.

It is relentless, inescapable, and exhausting.

This preamble about personality should hopefully help you, dear reader, to understand how I usually address problematical ideas by thinking and thinking and fidgeting with them until I manage to write some words — or perhaps a new open source package — that logically orders the ideas around it in a way which allows my brain to calm down and let it go, and how that process is important to me.

In this particular instance, however, genAI has defeated me. I cannot make it make sense, but I need to stop thinking about it anyway. It is too much and I need to give up.

My goal with this post is not to convince anyone of anything in particular — and we’ll get to why that is a bit later — but rather:

- to set out my current understanding in one place, including all the various negative feelings which are still bothering me, so I can stop repeating it elsewhere,

- to explain why I cannot build a case that I think should be particularly convincing to anyone else, particularly to someone who actively disagrees with me,

- in so doing, to illustrate why I think the discourse is so fractious and unresolvable, and finally

- to give myself, and hopefully by proxy to give others in the same situation, permission to just peace out of this nightmare quagmire corner of the noosphere.

But first, just because I can’t prove that my interlocutors are Wrong On The Internet doesn’t mean I won’t explain why I feel like they are wrong.

The Anti-Antis

Most recently, at time of writing, there has been a spate of “the genAI discourse is bad” articles, almost exclusively written from the perspective of, not boosters exactly, but pragmatically minded (albeit concerned) genAI users, wishing for the skeptics to be more pointed and accurate in our critiques. This is anti-anti-genAI content.

I am not going to link to any of these, because, as part of their self-fulfilling prophecy about the “genAI discourse”, they’re also all bad. Mostly, however, they had very little worthwhile to respond to because they were straw-manning their erstwhile interlocutors. They are all getting annoyed at “bad genAI criticism” while failing to engage with — and often failing to even mention — most of the actual substance of any serious genAI criticism. At least, any of the criticism that I’ve personally read.

I understand wanting to avoid a callout or Gish-gallop culture and just express your own ideas. So, I understand that they didn’t link directly to particular sources or go point-by-point on anyone else’s writing. Obviously I get it, since that’s exactly what this post is doing too.

But if you’re going to talk about how bad the genAI conversation is, without even mentioning huge categories of problem like “climate impact” or “disinformation” even once, I honestly don’t know what conversation you’re even talking about. This is peak “make up a guy to get mad at” behavior, which is especially confusing in this circumstance, because there’s an absolutely huge crowd of actual people that you could already be mad at.

The people writing these pieces have historically seemed very thoughtful to me. Some of them I know personally. It is worrying to me that their critical thinking skills appear to have substantially degraded specifically after spending a bunch of time intensely using this technology, which I believe has a scary risk of degrading one’s critical thinking skills.

Correlation is not causation or whatever, and sure, from a rhetorical perspective this is “post hoc ergo propter hoc” and maybe a little “ad hominem” for good measure, but correlation can still be concerning.

Yet, I cannot effectively respond to these folks, because they are making a practical argument that I cannot, despite my best efforts, find compelling evidence to refute categorically. My experiences of genAI are all extremely bad, but that is barely even anecdata. Their experiences are neutral-to-positive. Little scientific data exists. How to resolve this?

The Aesthetics

As I begin to state my own position, let me lead with this: my factual analysis of genAI is hopelessly negatively biased. I find the vast majority of the aesthetic properties of genAI to be intensely unpleasant.

I have been trying very hard to correct for this bias, to try to pay attention to the facts and to have a clear-eyed view of these systems’ capabilities. But the feelings are visceral, and the effort to compensate is tiring. It is, in fact, the desire to stop making this particular kind of effort that has me writing up this piece and trying to take an intentional break from the subject, despite its intense relevance.

When I say its “aesthetic qualities” are unpleasant, I don’t just mean the aesthetic elements of the output of genAIs themselves. The aesthetic quality of genAI writing, visual design, animation and so on, while mostly atrocious, is also highly variable. There are cherry-picked examples which look… fine. Maybe even good. For years now, there have been, famously, literally award-winning aesthetic outputs of genAI.

While I am ideologically predisposed to see any “good” genAI art as accruing the benefits of either a survivorship bias from thousands of terrible outputs or simple plagiarism rather than its own inherent quality, I cannot deny that in many cases it is “good”.

However, I am not just talking about the product, but the process: the aesthetic experience of interfacing with the genAI system itself, rather than the aesthetic experience of the outputs of that system.

I am not a visual artist and I am not really a writer, particularly not a writer of fiction or anything else whose experience is primarily aesthetic. So I will speak directly to the experience of software development.

I have seen very few successful examples of using genAI to produce whole, working systems. There is no shortage of highly public miserable failures, particularly from the vendors of these systems themselves, where the outputs are confused, self-contradictory, full of subtle errors and generally unusable. While few studies exist, it sure looks like this is an automated way of producing a Net Negative Productivity Programmer, throwing out chaff to slow down the rest of the team.

Juxtapose this with my aforementioned psychological motivations (to wit, I want everything in the computer to be orderly and make sense), and I’m sure most of you would have no trouble imagining that sitting through this sort of practice would make me extremely unhappy.

Despite this plethora of negative experiences, executives are aggressively mandating the use of AI. It looks like without such mandates, most people will not bother to use such tools, so the executives will need muscular policies to enforce their use.

Being forced to sit and argue with a robot while it struggles and fails to produce a working output, while you have to rewrite the code at the end anyway, is incredibly demoralizing. This is the kind of activity that activates every single major cause of burnout at once.

But, at least in that scenario, the thing ultimately doesn’t work, so there’s a hope that after a very stressful six-month pilot program, you can go to management with a pile of meticulously collected evidence, and shut the whole thing down.

I am inclined to believe that, in fact, it doesn’t work well enough to be used this way, and that we are going to see a big crash. But that is not the most aesthetically distressing thing. The most distressing thing is that maybe it does work; if not well enough to actually do the work, at least ambiguously enough to fool the executives long-term.

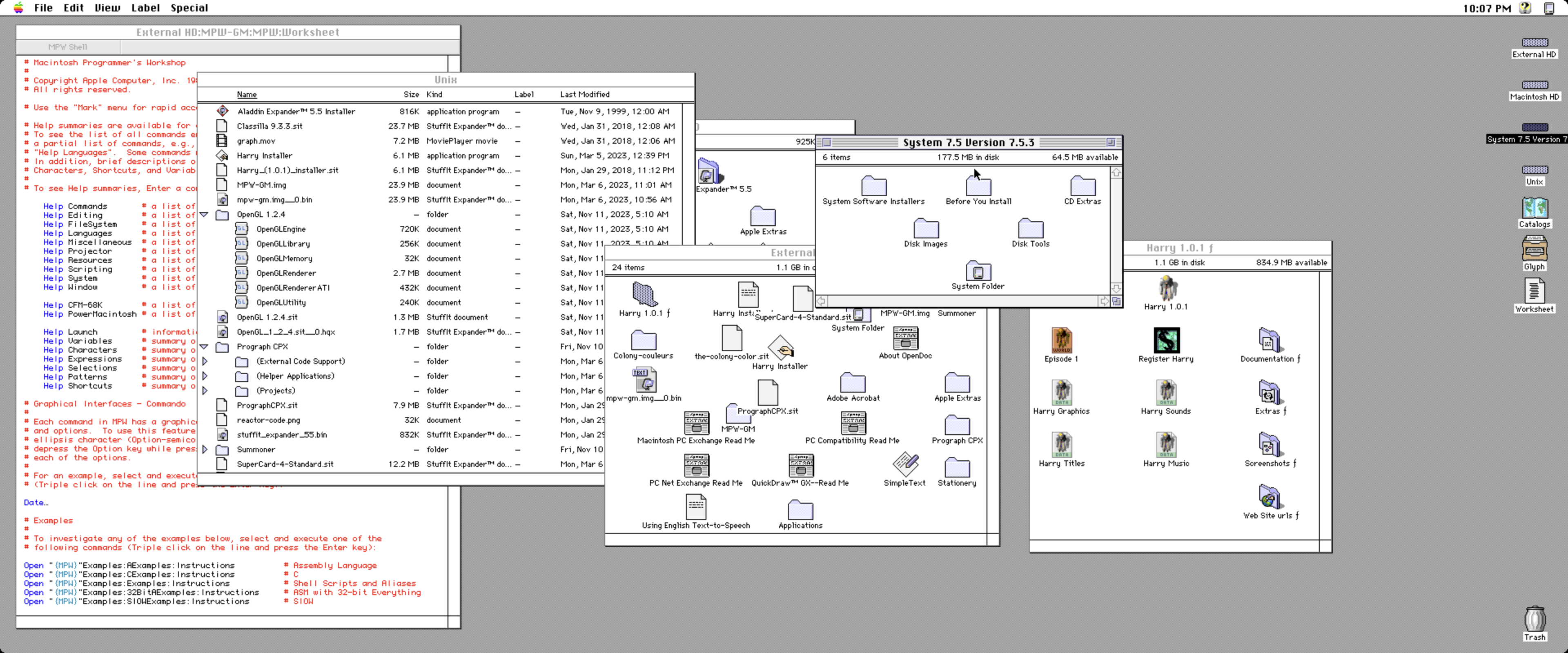

This project, in particular, stood out to me as an example. Its author, a self-professed “AI skeptic” who “thought LLMs were glorified Markov chain generators that didn’t actually understand code and couldn’t produce anything novel”, did a green-field project to test this hypothesis.

Now, this particular project is not totally inconsistent with a world in which LLMs cannot produce anything novel. One could imagine that, out in the world of open source, perhaps there is enough “OAuth provider written in TypeScript” blended up into the slurry of “borrowed” training data that the minor constraint of “make it work on Cloudflare Workers” is a small tweak. It is not fully dispositive of the question of the viability of “genAI coding”.

But it is a data point related to that question, and thus it did make me contend with what might happen if it were actually a fully demonstrative example. I reviewed the commit history, as the author suggested. For the sake of argument, I tried to ask myself if I would like working this way. Just for clarity on this question, I wanted to suspend judgement about everything else, assuming:

- the model could be created with ethically, legally, voluntarily sourced training data

- its usage involved consent from labor rather than authoritarian mandates

- sensible levels of energy expenditure, with minimal CO2 impact

- it is substantially more efficient to work this way than to just write the code yourself

and so on, and so on… would I like to use this magic robot that could mostly just emit working code for me? Would I use it if it were free, in all senses of the word?

No. I absolutely would not.

I found the experience of reading this commit history and imagining myself using such a tool — without exaggeration — nauseating.

Unlike many programmers, I love code review. I find that it is one of the best parts of the process of programming. I can help people learn, and develop their skills, and learn from them, and appreciate the decisions they made, and develop an impression of a fellow programmer’s style. It’s a great way to build a mutual theory of mind.

Of course, it can still be really annoying; people make mistakes, often can’t see things I find obvious, and in particular when you’re reviewing a lot of code from a lot of different people, you often end up having to repeat explanations of the same mistakes. So I can see why many programmers, particularly those more introverted than I am, hate it.

But, ultimately, when I review their code and work hard to provide clear and actionable feedback, people learn and grow and it’s worth that investment in inconvenience.

The process of coding with an “agentic” LLM appears to be the process of carefully distilling all the worst parts of code review, and removing and discarding all of its benefits.

The lazy, dumb, lying robot asshole keeps making the same mistakes over and over again, never improving, never genuinely reacting, always obsequiously pretending to take your feedback on board.

Even when it “does” actually “understand” and manages to load your instructions into its context window, 200K tokens later it will slide cleanly out of its memory and you will have to say it again.

All the while, it is attempting to trick you. It gets most things right, but it consistently makes mistakes in the places that you are least likely to notice. In places where a person wouldn’t make a mistake. Your brain keeps trying to develop a theory of mind to predict its behavior but there’s no mind there, so it always behaves infuriatingly randomly.

I don’t think I am the only one who feels this way.

The Affordances

Whatever our environments afford, we tend to do more of. Whatever they resist, we tend to do less of. So in a world where we were all writing all of our code and emails and blog posts and texts to each other with LLMs, what do they afford that existing tools do not?

As a weirdo who enjoys code review, I also enjoy process engineering. The central question of almost all process engineering is to continuously ask: how shall we shape our tools, to better shape ourselves?

LLMs are an affordance for producing more text, faster. How is that going to shape us?

Again arguing in the alternative here: assuming the text is free from errors and hallucinations and whatever, and it’s all correct and fit for purpose, it reduces the pain of circumstances where you have to repeat yourself. Less pain! Sounds great; I don’t like pain.

Every codebase has places where you need boilerplate. Every organization has defects in its information architecture that require repetition of certain information rather than a link back to the authoritative source of truth. Often, these problems persist for a very long time, because it is difficult to overcome the institutional inertia required to make real progress rather than going along with the status quo. But this is often where the highest-value projects can be found. Where there’s muck, there’s brass.

The process-engineering function of an LLM, therefore, is to prevent fundamental problems from ever getting fixed, to reward the rapid-fire overwhelm of infrastructure teams with an immediate, catastrophic cascade of legacy code that is now much harder to delete than it is to write.

There is a scene in Game of Thrones where Khal Drogo kills himself. He does so by replacing a stinging, burning, therapeutic antiseptic wound dressing with some cool, soothing mud. The mud felt nice, addressed the immediate pain, removed the discomfort of the antiseptic, and immediately gave him a lethal infection.

The pleasing feeling of immediate progress when one prompts an LLM to solve some problem feels like cool mud on my brain.

The Economics

We are in the middle of a mania around this technology. As I have written about before, I believe the mania will end. There will then be a crash, and a “winter”. But, as I may not have stressed sufficiently, this crash will be the biggest of its kind — so big, that it is arguably not of a kind at all. The level of investment in these technologies is bananas and the possibility that the investors will recoup their investment seems close to zero. Meanwhile, that cost keeps going up, and up, and up.

Others have reported on this in detail, and I will not reiterate all that here, but in addition to being a looming and scary industry-wide (if we are lucky; more likely, world-wide) economic threat, it is also going to drive some panicked behavior from management.

Panicky behavior from management stressed that their idea is not panning out is, famously, the cause of much human misery. I expect that even the “good” scenario, where some profit is ultimately achieved, will still involve mass layoffs rocking the industry, panicked re-hiring, and the destruction of large amounts of wealth.

It feels bad to think about this.

The Energy Usage

For a long time I believed that the energy impact was overstated. I am even on record, about a year ago, saying I didn’t think the energy usage was a big deal. I think I was wrong about that.

It initially seemed like focusing on AI’s energy usage was letting regular old data centers off the hook. But recently I have learned that, while the numbers are incomplete because the vendors aren’t sharing information, they’re also extremely bad.

I think there’s probably a version of this technology that isn’t a climate emergency nightmare, but that’s not the version that the general public has access to today.

The Educational Impact

LLMs are making academic cheating incredibly rampant.

Not only is it so common as to be nearly universal, it’s also extremely harmful to learning.

For learning, genAI is a forklift at the gym.

To some extent, LLMs are simply revealing a structural rot within education and academia that has been building for decades if not centuries. But it was within those inefficiencies and the inconveniences of the academic experience that real learning was, against all odds, still happening in schools.

LLMs produce a frictionless, streamlined process where students can effortlessly glide through the entire credential, learning nothing. Once again, they dull the pain without regard to its cause.

This is not good.

The Invasion of Privacy

This is obviously only a problem with the big cloud models, but then, the big cloud models are the only ones that people actually use. If you are having conversations about anything private with ChatGPT, you are sending all of that private information directly to Sam Altman, to do with as he wishes.

Even if you don’t think he is a particularly bad guy, and even if he won’t create the privacy nightmare on purpose, maybe he will be forced to do so as a result of some bizarre Kafkaesque accident.

Imagine the scenario, for example, where a woman is tracking her cycle and uploading the logs to ChatGPT so she can chat with it about a health concern. Except, surprise, you don’t have to imagine: you can just search for it. I have personally, organically, seen three separate women on YouTube, at least one of whom lives in Texas, not only do this on camera but recommend doing this to their audiences.

Citation links withheld on this particular claim for hopefully obvious reasons.

I assure you that I am neither particularly interested in menstrual products nor genAI content, and if I am seeing this more than once, it is probably a distressingly large trend.

The Stealing

The training data for LLMs is stolen. I don’t mean like “pirated” in the sense where someone illicitly shares a copy they obtained legitimately; I mean their scrapers are ignoring both norms and laws to obtain copies under false pretenses, destroying other people’s infrastructure in the process.

The Fatigue

I have provided references to numerous articles outlining rhetorical and sometimes data-driven cases for the existence of certain properties and consequences of genAI tools. But I can’t prove any of these properties, either at a point in time or as a durable ongoing problem.

The LLMs themselves are simply too large to model with the usual kind of heuristics one would use to think about software. I’d sooner be able to predict the physics of dice in a casino than a 2-trillion-parameter neural network. They resist scientific understanding, not just because of their size and complexity, but because unlike a natural phenomenon (which could of course be considerably larger and more complex) they resist experimentation.

The first form of genAI resistance to experiment is that every discussion is a motte-and-bailey. If I use a free model and get a bad result, I’m told it’s because I should have used the paid model. If I get a bad result with ChatGPT, I should have used Claude. If I get a bad result with a chatbot, I need to start using an agentic tool. If an agentic tool deletes my hard drive by putting os.system("rm -rf ~/") into sitecustomize.py, then I guess I should have built my own MCP integration with a completely novel heretofore never even considered security sandbox or something?

What configuration, exactly, would let me make a categorical claim about these things? What specific methodological approach should I stick to, to get reliably adequate prompts?

For the record though, if the idea of the free models is that they are going to be provocative demonstrations of the impressive capabilities of the commercial models, and the results are consistently dogshit, I am finding it increasingly hard to care how much better the paid ones are supposed to be, especially since the “better”-ness cannot really be quantified in any meaningful way.

The motte-and-bailey doesn’t stop there though. It’s a war on all fronts. Concerned about energy usage? That’s OK, you can use a local model. Concerned about infringement? That’s OK, somewhere, somebody, maybe, has figured out how to train models consensually. Worried about the politics of enriching the richest monsters in the world? Don’t worry, you can always download an “open source” model from Hugging Face. It doesn’t matter that many of these properties are mutually exclusive and attempting to fix one breaks two others; there’s always an answer. The field is so abuzz with so many people trying to pull in so many directions at once that it is legitimately difficult to understand what’s going on.

Even here though, I can see that characterizing everything this way is unfair to a hypothetical sort of person. If there is someone working at one of these thousands of AI companies that have been springing up like toadstools after a rain, and they really are solving one of these extremely difficult problems, how can I handwave that away? We need people working on problems, that’s like, the whole point of having an economy. And I really don’t like shitting on other people’s earnest efforts, so I try not to dismiss whole fields. Given how AI has gotten into everything, in a way that e.g. cryptocurrency never did, painting with that broad a brush inevitably ends up tarring a bunch of stuff that isn’t even really AI at all.

The second form of genAI resistance to experiment is the inherent obfuscation of productization. The models themselves are already complicated enough, but the products that are built around the models are evolving extremely rapidly. ChatGPT is not just a “model”, and with the rapid deployment of Model Context Protocol tools, the edges of all these things will blur even further. Every LLM is now just an enormous unbounded soup of arbitrary software doing arbitrary whatever. How could I possibly get my arms around that to understand it?

The Challenge

I have woefully little experience with these tools.

I’ve tried them out a little bit, and almost every single time the result has been a disaster that has not made me curious to push further. Yet, I keep hearing from all over the industry that I should.

To some extent, I feel like the motte-and-bailey characterization above is fair; if the technology itself can really do real software development, it ought to be able to do it in multiple modalities, and there’s nothing anyone can articulate to me about GPT-4o which puts it in a fundamentally different class than GPT-3.5.

But, also, I consistently hear that the subjective experience of using the premium versions of the tools is actually good, and the free ones are actually bad.

I keep struggling to find ways to try them “the right way”, the way that people I know and otherwise respect claim to be using them, but I haven’t managed to do so in any meaningful way yet.

I do not want to be using the cloud versions of these models with their potentially hideous energy demands; I’d like to use a local model. But there is obviously not a nicely composed way to use local models like this.

Since there are apparently zero models with ethically sourced training data, and litigation is ongoing to determine the legal relationships of training data and outputs, even if I can be comfortable with some level of plagiarism on a project, I don’t feel that I can introduce the existential legal risk into other people’s infrastructure, so I would need to make a new project.

Others have differing opinions of course, including some within my dependency chain, which does worry me, but I still don’t feel like I can freely contribute further to the problem; it’s going to be bad enough to unwind any impact upstream. Even just for my own sake, I don’t want to make it worse.

This especially presents a problem because I have way too much stuff going on already. A new project is not practical.

Finally, even if I did manage to satisfy all of my quirky constraints, would this experiment really be worth anything? The models and tools that people are raving about are the big, expensive, harmful ones. If I proved to myself yet again that a small model with bad tools was unpleasant to use, I wouldn’t really be addressing my opponents’ views.

I’m stuck.

The Surrender

I am writing this piece to make my peace with giving up on this topic, at least for a while. While I do idly hope that some folks might find bits of it convincing, and perhaps find ways to be more mindful with their own usage of genAI tools, and consider the harm they may be causing, that’s not actually the goal. And that is not the goal because it is just so much goddamn work to prove.

Here, I must return to my philosophical hobbyhorse of sprachspiel. In this case, I specifically want to use it as an analytical tool, not just to understand what I am trying to say, but what the purpose of my speech is.

The concept of sprachspiel is most frequently deployed to describe the goal of the language game being played, but in game theory, that’s only half the story. Speech — particularly rigorously justified speech — has a cost, as well as a benefit. I can make shit up pretty easily, but if I want to do anything remotely like scientific or academic rigor, that cost can be astronomical. In the case of developing an abstract understanding of LLMs, the cost is just too high.

So what is my goal, then? To be King Canute, standing astride the shore of “tech”, whatever that is, commanding the LLM tide not to rise? This is a multi-trillion-dollar juggernaut.

Even the rump, loser, also-ran fragment of it has the power to literally suffocate us in our homes if they so choose, completely insulated from any consequence. If the power curve starts there, imagine what the winners in this industry are going to be capable of, irrespective of the technology they’re building, just with the resources they have to hand. Am I going to write a blog post that can rival their propaganda apparatus? Doubtful.

Instead, I will just have to concede that maybe I’m wrong. I don’t have the skill, or the knowledge, or the energy, to demonstrate with any level of rigor that LLMs are generally, in fact, hot garbage. Intellectually, I will have to acknowledge that maybe the boosters are right. Maybe it’ll be OK.

Maybe the carbon emissions aren’t so bad. Maybe everybody is keeping them secret in ways that they don’t for other types of datacenter for perfectly legitimate reasons. Maybe the tools really can write novel and correct code, and with a little more tweaking, it won’t be so difficult to get them to do it. Maybe by the time they become a mandatory condition of access to developer tools, they won’t be miserable.

Sure, I even sincerely agree: intellectual property really has been a pretty bad idea from the beginning. Maybe it’s OK that we’ve made an exception to those rules. The rules were stupid anyway, so what does it matter if we let a few billionaires break them? Really, everybody should be able to break them (although of course, regular people can’t, because we can’t afford the lawyers to fight off the MPAA and RIAA, but that’s a problem with the legal system, not tech).

I come not to praise “AI skepticism”, but to bury it.

Maybe it really is all going to be fine. Perhaps I am simply catastrophizing; I have been known to do that from time to time. I can even sort of believe it, in my head. Still, even after writing all this out, I can’t quite manage to believe it in the pit of my stomach.

Unfortunately, that feeling is not something that you, or I, can argue with.

Acknowledgments

Thank you to my patrons. Normally, I would say, “who are supporting my writing on this blog”, but in the case of this piece, I feel more like I should apologize to them for this than thank them; these thoughts have been preventing me from thinking more productive, useful things that I actually have relevant skill and expertise in. This felt more like a creative blockage that I just needed to expel than a deliberately written article. If you like what you’ve read here and you’d like to read more of it, well, too bad; I am sincerely determined to stop writing about this topic. But, if you’d like to read more stuff like other things I have written, or you’d like to support my various open-source endeavors, you can support my work as a sponsor!