by Donovan Preston (noreply@blogger.com) at February 19, 2026 02:36 AM

January 25, 2024

Glyph Lefkowitz

The Macintosh

Today is the 40th anniversary of the announcement of the Macintosh. Others have articulated compelling emotional narratives that easily eclipse my own similar childhood memories of the Macintosh family of computers. So instead, I will ask a question:

What is the Macintosh?

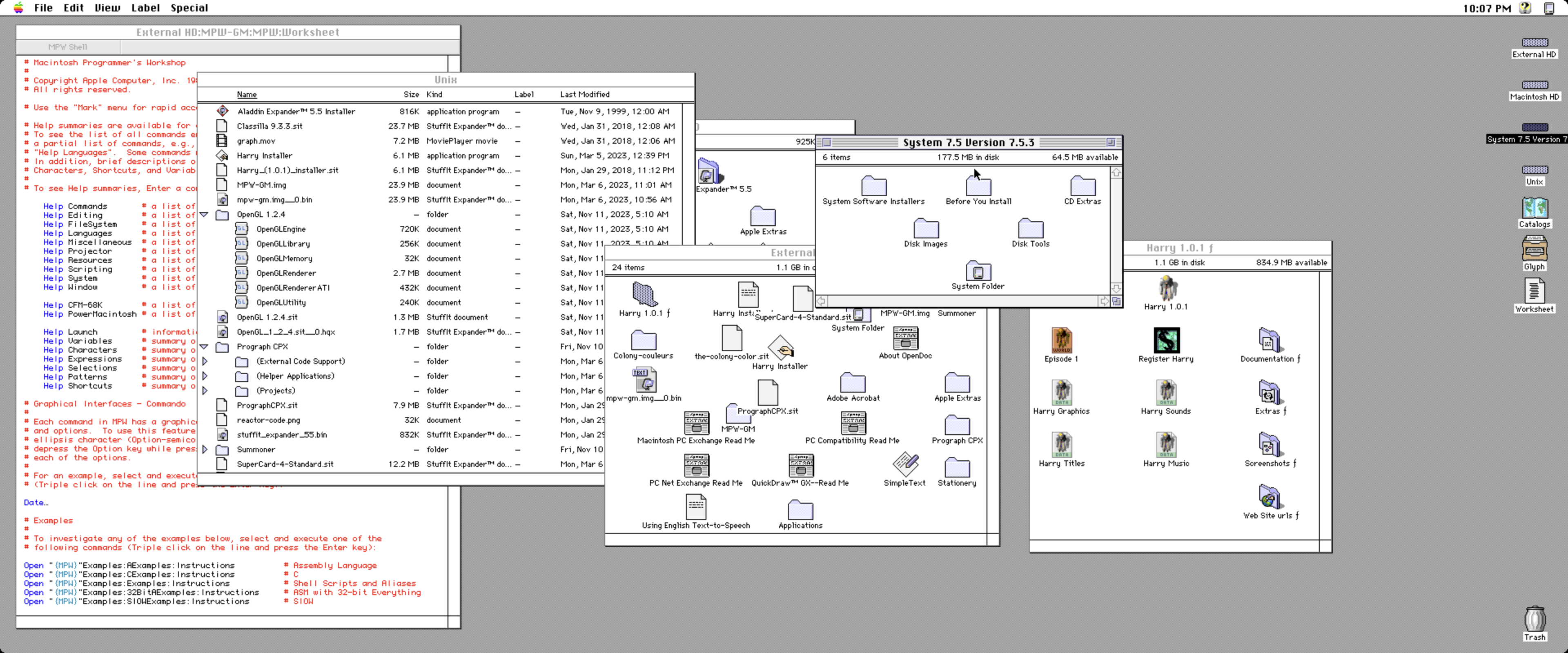

As this is the anniversary of the beginning, that is where I will begin. The original Macintosh, the classic MacOS, the original “System Software” are a shining example of “fake it till you make it”. The original Mac operating system was fake.

Don’t get me wrong, it was an impressive technical achievement to fake something like this, but what Steve Jobs did was to see a demo of a Smalltalk-76 system, an object-oriented programming environment with 1-to-1 correspondences between graphical objects on screen and runtime-introspectable data structures, a self-hosting high level programming language, memory safety, message passing, garbage collection, and many other advanced facilities that would not be popularized for decades, and make a fake version of it which ran on hardware that consumers could actually afford, by throwing out most of what made the programming environment interesting and replacing it with a much more memory-efficient illusion implemented in 68000 assembler and Pascal.

The machine’s RAM didn’t have room for a kernel. Whatever application was running was in control of the whole system. No protected memory, no preemptive multitasking. It was a house of cards that was destined to collapse. And collapse it did, both in the short term and the long. In the short term, the system was buggy and unstable, and application crashes resulted in system halts and reboots.

In the longer term, the company based on the Macintosh effectively went out of business and was reverse-acquired by NeXT, but they kept the better-known branding of the older company. The old operating system was gradually disposed of, quickly replaced at its core with a significantly more mature generation of operating system technology based on BSD UNIX and Mach. With the removal of Carbon compatibility 4 years ago, the last vestigial traces of it were removed. But even as early as 2004 the Mac was no longer really the Macintosh.

What NeXT had built was much closer to the Smalltalk system that Jobs was originally attempting to emulate. Its programming language, “Objective-C”, explicitly called back to Smalltalk’s message-passing, right down to the syntax. Objects on the screen now did correspond to “objects” you could send messages to. The development environment understood this too; that was a major selling point.

The NeXTSTEP operating system and Objective-C runtime did not have garbage collection, but they offered a similar developer experience by providing reference counting throughout the object model. The original vision was finally achieved, for real, and that’s what we have on our desks and in our backpacks today (and in our pockets, in the form of the iPhone, which is in some sense a tiny next-generation NeXT computer itself).

The one detail I will relate from my own childhood is this: my first computer was not a Mac. My first computer, as a child, was an Amiga. When I was 5, I had a computer with 4096 colors, real multitasking, 3D graphics, and a paint program that could draw hard real-time animations with palette tricks. Then the writing was on the wall for Commodore and I got a computer which had 256 colors, a bunch of old software that was still black and white, an operating system that would freeze if you held down the mouse button on the menu bar and couldn’t even play animations smoothly. Many will relay their first encounter with the Mac as a kind of magic, but mine was a feeling of loss and disappointment. Unlike almost everyone at the time, I knew what a computer really could be, and despite many pleasant and formative experiences with the Macintosh in the meanwhile, it would be a decade before I saw a real one again.

But this is not to deride the faking. The faking was necessary. Xerox was not going to put an Alto running Smalltalk on anyone’s desk. People have always grumbled that Apple products are expensive, but in 2024 dollars, one of these Xerox computers cost roughly $55,000.

The Amiga was, in its own way, a similar sort of fake. It managed its own miracles by putting performance-critical functions into dedicated hardware which rapidly became obsolete as software technology evolved much more rapidly.

Jobs is celebrated as a genius of product design, and he certainly wasn’t bad at it, but I had the rare privilege of seeing the homework he was cribbing from in that subject, and in my estimation he was a B student at best. Where he got an A was bringing a vision to life by creating an organization, both inside and outside of his companies.

If you want a culture-defining technological artifact, everybody in the culture has to be able to get their hands on one. This doesn’t just mean that the builder has to be able to build it. The buyer also has to be able to afford it, obviously. Developers have to be able to develop for it. The buyer has to actually want it; the much-derided “marketing” is a necessary part of the process of making a product what it is. Everyone needs to be able to move together in the direction of the same technological future.

This is why it was so fitting that Tim Cook was made Jobs's successor. The supply chain was the hard part.

The crowning, final achievement of Jobs’s career was the fact that not only did he fake it — the fakes were flying fast and thick at that time in history, even if they mostly weren’t as good — it was that he faked it and then he built the real version and then he bridged the transitions to get to the real thing.

I began here by saying that the Mac isn’t really the Mac, and speaking in terms of a point-in-time analysis, that is true. Its technology today has practically nothing in common with its technology in 1984. This is not merely an artifact of the length of time here: the technology at the core of various UNIXes in 1984 bears a lot of resemblance to UNIX-like operating systems today. But looking across its whole history from 1984 to 2024, there is undeniably a continuity to the conceptual “Macintosh”.

Not just as a user, but as a developer moving through time rather than looking at just a few points: the “Macintosh”, such as it is, has transitioned from the Motorola 68000 to the PowerPC to Intel 32-bit to Intel 64-bit to ARM. From obscurely proprietary to enthusiastically embracing open source and then, sadly, much of the way back again. It moved from black and white to color, from desktop to laptop, from Carbon to Cocoa, from Display PostScript to Display PDF, all the while preserving instantly recognizable iconic features like the apple menu and the cursor pointer, while providing developers documentation and SDKs and training sessions that helped them transition their apps through multiple near-complete rewrites as a result of all of these changes.

To paraphrase Abigail Thorne’s first video about Identity, identity is what survives. The Macintosh is an interesting case study in the survival of the idea of a platform, as distinct from the platform itself. It is the Computer of Theseus, a thought experiment successfully brought to life and sustained over time.

If there is a personal lesson to be learned here, I’d say it’s that one’s own efforts need not be perfect. In fact, a significantly flawed vision that you can achieve right now is often much, much better than a perfect version that might take just a little bit longer, if you don’t have the resources to actually sustain going that much longer. You have to be bad at things before you can be good at them. Real artists, as Jobs famously put it, ship.

So my contribution to the 40th anniversary reflections is to say: the Macintosh is dead. Long live the Mac.

Acknowledgments

Thank you to my patrons who are supporting my writing on this blog. If you like what you’ve read here and you’d like to read more of it, or you’d like to support my various open-source endeavors, you can support my work as a sponsor!

Unsigned Commits

I am going to tell you why I don’t think you should sign your Git commits, even though doing so with SSH keys is now easier than ever. But first, to contextualize my objection, I have a brief hypothetical for you, and then a bit of history from the evolution of security on the web.

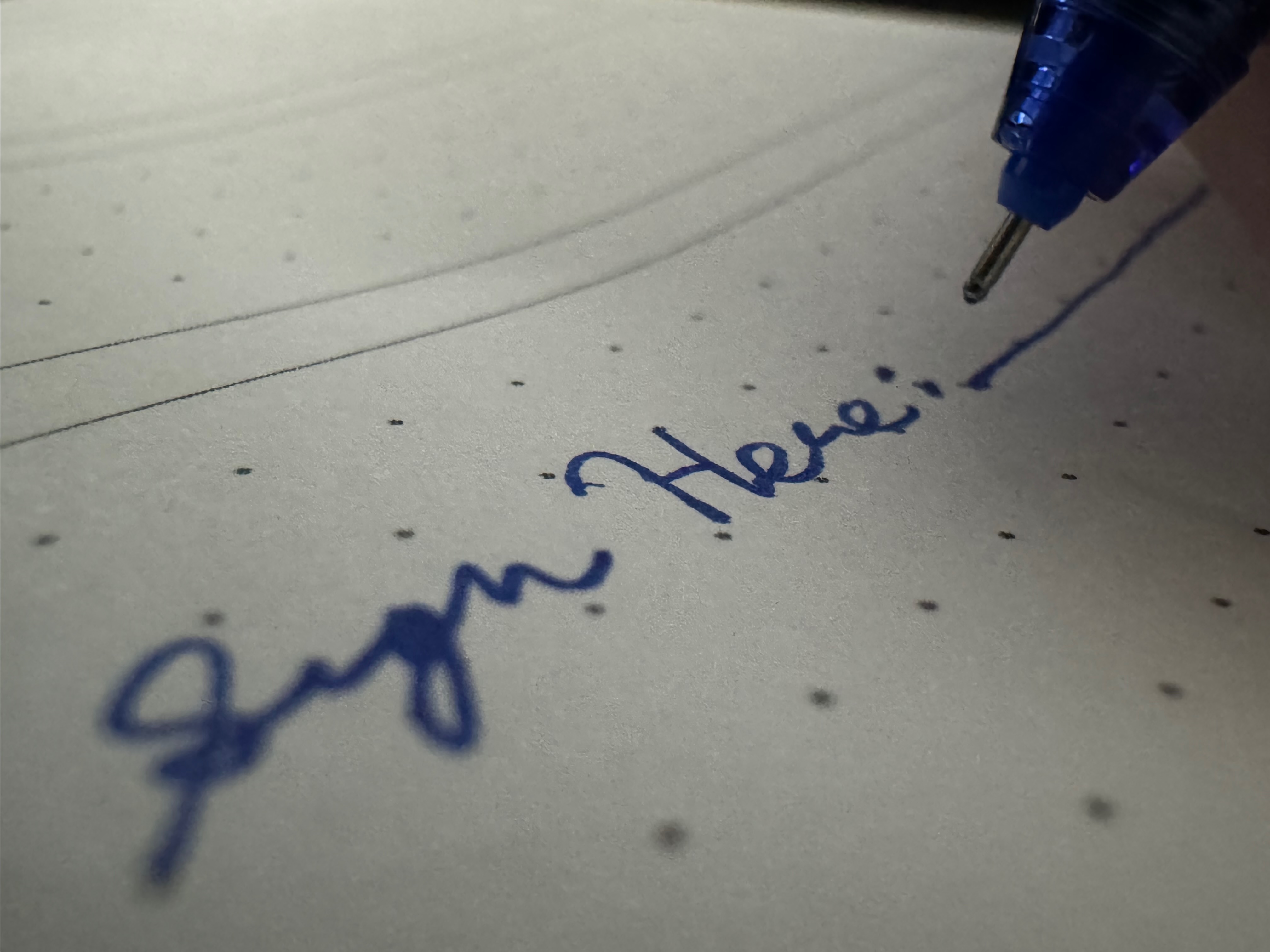

It seems like these days, everybody’s signing all different kinds of papers.

Bank forms, permission slips, power of attorney; it seems like if you want to securely validate a document, you’ve gotta sign it.

So I have invented a machine that automatically signs every document on your desk, just in case it needs your signature. Signing is good for security, so you should probably get one, and turn it on, just in case something needs your signature on it.

We also want to make sure that verifying your signature is easy, so we will have them all notarized and duplicates stored permanently and publicly for future reference.

No? Not interested?

Hopefully, that sounded like a silly idea to you.

Most adults in modern civilization have learned that signing your name to a document has an effect. It is not merely decorative; the words in the document being signed have some specific meaning and can be enforced against you.

In some ways the metaphor of “signing” in cryptography is bad. One does not “sign” things with “keys” in real life. But here, it is spot on: a cryptographic signature can have an effect.

It should be an input to some software, one that is acted upon. Software does a thing differently depending on the presence or absence of a signature. If it doesn’t, the signature probably shouldn’t be there.
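To make that concrete, here is a minimal sketch of software that treats a signature as an input it acts on. Everything in it is an assumption for illustration: `install_update` and `TRUSTED_KEY` are hypothetical names, and an HMAC from Python's standard library stands in for a real public-key signature, which the standard library does not provide.

```python
import hashlib
import hmac
from typing import Optional

# Hypothetical shared key, for illustration only. A real system would
# verify a public-key signature rather than a shared-secret HMAC.
TRUSTED_KEY = b"example-key-for-illustration-only"


def sign(payload: bytes, key: bytes = TRUSTED_KEY) -> bytes:
    """Produce an HMAC-SHA256 tag that stands in for a signature."""
    return hmac.new(key, payload, hashlib.sha256).digest()


def install_update(payload: bytes, signature: Optional[bytes]) -> str:
    """Behave differently depending on the signature's presence and validity."""
    if signature is None:
        return "rejected: unsigned"
    # compare_digest avoids leaking how many leading bytes matched.
    if not hmac.compare_digest(sign(payload), signature):
        return "rejected: bad signature"
    return "installed"
```

The point is the branch, not the cryptography: if every path through `install_update` returned `"installed"`, the signature would be decoration.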

Consider the most venerable example of encryption and signing that we all deal with every day: HTTPS. Many years ago, browsers would happily display unencrypted web pages. The browser would also encrypt the connection, if the server operator had paid for an expensive certificate and correctly configured their server. If that operator messed up the encryption, it would pop up a helpful dialog box that would tell the user “This website did something wrong that you cannot possibly understand. Would you like to ignore this and keep working?” with buttons that said “Yes” and “No”.

Of course, these are not the precise words that were written. The words, as written, said things about “information you exchange” and “security certificate” and “certifying authorities” but “Yes” and “No” were the words that most users read. Predictably, most users just clicked “Yes”.

In the usual case, where users ignored these warnings, it meant that no user ever got meaningful security from HTTPS. It was a component of the web stack that did nothing but funnel money into the pockets of certificate authorities and occasionally present annoying interruptions to users.

In the case where the user carefully read and honored these warnings in the spirit they were intended, adding any sort of transport security to your website was a potential liability. If you got everything perfectly correct, nothing happened except the browser would display a picture of a small green purse. If you made any small mistake, it would scare users off and thereby directly harm your business. You would only want to do it if you were doing something that put a big enough target on your site that you became unusually interesting to attackers, or were required to do so by some contractual obligation, such as those imposed by credit card companies.

Keep in mind that the second case here is the best case.

In 2016, the browser makers noticed this problem and started taking some pretty aggressive steps towards actually enforcing the security that HTTPS was supposed to provide, by fixing the user interface to do the right thing. If your site didn’t have security, it would be shown as “Not Secure”, a subtle warning that would gradually escalate in intensity as time went on, correctly incentivizing site operators to adopt transport security certificates. On the user interface side, certificate errors would be significantly harder to disregard, making it so that users who didn’t understand what they were seeing would actually be stopped from doing the dangerous thing.

Nothing fundamental[1] changed about the technical aspects of the cryptographic primitives or constructions being used by HTTPS in this time period, but socially, the meaning of an HTTP server signing and encrypting its requests changed a lot.

Now, let’s consider signing Git commits.

You may have heard that in some abstract sense you “should” be signing your commits. GitHub puts a little green “verified” badge next to commits that are signed, which is neat, I guess. They provide “security”. 1Password provides a nice UI for setting it up. If you’re not a 1Password user, GitHub itself recommends you put in just a few lines of configuration to do it with either a GPG, SSH, or even an S/MIME key.

But while GitHub’s documentation quite lucidly tells you how to sign your commits, its explanation of why is somewhat less clear. Their purse is the word “Verified”; it’s still green. If you enable “vigilant mode”, you can make the blank “no verification status” option say “Unverified”, but not much else changes.

This is like the old-style HTTPS verification “Yes”/“No” dialog, except that there is not even an interruption to your workflow. They might put the “Unverified” status on there, but they’ve gone ahead and clicked “Yes” for you.
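For contrast, here is a rough sketch of what it would look like for a tool to click “No” on your behalf. It relies on the `%G?` placeholder in Git's pretty formats, which reports a one-letter signature status for a commit. The `is_acceptable` policy is a hypothetical example, not something Git or GitHub enforces, and the status Git reports depends on the keys and configuration available on the local machine.

```python
import subprocess

# Status letters documented for git's `%G?` pretty-format placeholder.
SIGNATURE_STATUS = {
    "G": "good signature",
    "B": "bad signature",
    "U": "good signature with unknown validity",
    "X": "good signature that has expired",
    "Y": "good signature made by an expired key",
    "R": "good signature made by a revoked key",
    "E": "signature cannot be checked",
    "N": "no signature",
}


def signature_status(rev: str = "HEAD", repo: str = ".") -> str:
    """Ask git for the signature status letter of a single commit."""
    result = subprocess.run(
        ["git", "-C", repo, "log", "-1", "--format=%G?", rev],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


def is_acceptable(status: str) -> bool:
    """Hypothetical strict policy: only a fully valid signature passes."""
    return status == "G"
```

Calling `is_acceptable(signature_status())` inside a hook or CI step would turn the badge into a gate. Deciding what that gate should protect is exactly the open question.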

It is tempting to think that the “HTTPS” metaphor will map neatly onto Git commit signatures. It was bad when the web wasn’t using HTTPS, and the next step in that process was for Let’s Encrypt to come along and for the browsers to fix their implementations. Getting your certificates properly set up in the meanwhile and becoming familiar with the tools for properly doing HTTPS was unambiguously a good thing for an engineer to do. I did, and I’m quite glad I did so!

However, there is a significant difference: signing and encrypting an HTTPS request is ephemeral; signing a Git commit is functionally permanent.

This ephemeral nature meant that errors in the early HTTPS landscape were easily fixable. Earlier I mentioned that there was a time where you might not want to set up HTTPS on your production web servers, because any small screw-up would break your site and thereby your business. But if you were really skilled and you could see the future coming, you could set up monitoring, avoid these mistakes, and rapidly recover. These mistakes didn’t need to badly break your site.

We can extend the analogy to HTTPS, but we have to take a detour into one of the more unpleasant mistakes in HTTPS’s history: HTTP Public Key Pinning, or “HPKP”. The idea with HPKP was that you could publish a record in an HTTP header where your site commits[2] to using certain certificate authorities for a period of time, where that period of time could be “forever”. Attackers gonna attack, and attack they did. Even without getting attacked, a site could easily commit “HPKP Suicide” where they would pin the wrong certificate authority with a long timeline, and their site was effectively gone for every browser that had ever seen those pins. As a result, after a few years, HPKP was completely removed from all browsers.
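For a sense of how little it took, here is a sketch of building such a header, following the `Public-Key-Pins` format from RFC 7469, where each pin is the base64-encoded SHA-256 hash of a public key (which may belong to a certificate authority). The helper names and the placeholder key bytes are assumptions for illustration; a real pin hashes the DER-encoded SubjectPublicKeyInfo of an actual key.

```python
import base64
import hashlib
from typing import Sequence


def spki_pin(spki_der: bytes) -> str:
    """Base64-encoded SHA-256 of a DER-encoded SubjectPublicKeyInfo."""
    digest = hashlib.sha256(spki_der).digest()
    return base64.b64encode(digest).decode("ascii")


def hpkp_header(pins: Sequence[str], max_age_seconds: int,
                include_subdomains: bool = False) -> str:
    """Assemble a Public-Key-Pins header line from pins and a lifetime."""
    if len(pins) < 2:
        # RFC 7469 expects at least one backup pin alongside the live key.
        raise ValueError("need a primary pin and at least one backup pin")
    directives = [f'pin-sha256="{pin}"' for pin in pins]
    directives.append(f"max-age={max_age_seconds}")
    if include_subdomains:
        directives.append("includeSubDomains")
    return "Public-Key-Pins: " + "; ".join(directives)


# Placeholder bytes, NOT real keys.
primary = spki_pin(b"placeholder primary public key")
backup = spki_pin(b"placeholder backup public key")
TEN_YEARS = 10 * 365 * 24 * 60 * 60
print(hpkp_header([primary, backup], TEN_YEARS, include_subdomains=True))
```

One response header with a large `max-age` and the wrong pins was enough: every browser that saw it would refuse the site until the pins expired.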

Git commit signing is even worse. With HPKP, you could easily make terrible mistakes with permanent consequences even though you knew the exact meaning of the data you were putting into the system at the time you were doing it. With signed commits, you are saying something permanently, but you don’t really know what it is that you’re saying.

Today, what is the benefit of signing a Git commit? GitHub might present it as “Verified”. It’s worth noting that only GitHub will do this, since they are the root of trust for this signing scheme. So, by signing commits and registering your keys with GitHub, you are, at best, helping to lock in GitHub as a permanent piece of infrastructure that is even harder to dislodge because they are not only where your code is stored, but also the arbiters of whether or not it is trustworthy.

In the future, what is the possible security benefit? If we all collectively decide we want Git to be more secure, then we will need to meaningfully treat signed commits differently from unsigned ones.

There’s a long tail of unsigned commits several billion entries long. And those are in the permanent record as much as the signed ones are, so future tooling will have to be able to deal with them. If, as stewards of Git, we wish to move towards a more secure Git, as the stewards of the web moved towards a more secure web, we do not have the option that the web did. In the browser, the meaning of a plain-text HTTP or incorrectly-signed HTTPS site changed, in order to encourage the site’s operator to change the site to be HTTPS.

In contrast, the meaning of an unsigned commit cannot change, because there are zillions of unsigned commits lying around in critical infrastructure and we need them to remain there. Commits cannot meaningfully be changed to become signed retroactively. Unlike an online website, they are part of a historical record, not an operating program. So we cannot establish the difference in treatment by changing how unsigned commits are treated.

That means that tooling maintainers will need to provide some difference in behavior that provides some incentive. With HTTPS, the binary choice was clear: don’t present sites with incorrect, potentially compromised configurations to users. The question was just how to achieve that. With Git commits, the difference in treatment of a “trusted” commit is far less clear.

If you will forgive me a slight straw-man here, one possible naive interpretation of a “trusted” signed commit is that it’s OK to run in CI. Conveniently, it’s not simply “trusted” in a general sense. If you signed it, it’s trusted to be from you, specifically. Surely it’s fine if we bill the CI costs for validating the PR that includes that signed commit to your GitHub account?

Now, someone can piggy-back off a 1-line typo fix that you made on top of an unsigned commit to some large repo, making you implicitly responsible for transitively signing all unsigned parent commits, even though you haven’t looked at any of the code.
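The transitive part follows from how Git names things. A commit signature covers the commit object's text, and that text refers to the tree and to every parent commit by hash. The sketch below assumes the SHA-1 object format and uses a simplified commit body with made-up author lines; `git_object_id` and `commit_body` are illustrative helpers, not Git APIs.

```python
import hashlib
from typing import Sequence

# Git's well-known ID for the empty tree in SHA-1 repositories.
EMPTY_TREE = "4b825dc642cb6eb9a060e54bf8d69288fbee4904"


def git_object_id(kind: str, body: bytes) -> str:
    """Hash an object as SHA-1 Git does: '<kind> <length>', a NUL, then the body."""
    header = f"{kind} {len(body)}\0".encode("ascii")
    return hashlib.sha1(header + body).hexdigest()


def commit_body(tree: str, parents: Sequence[str], message: str) -> bytes:
    """Build simplified commit text; the identity lines are made up."""
    who = "A U Thor <author@example.com> 0 +0000"
    lines = [f"tree {tree}"]
    lines += [f"parent {parent}" for parent in parents]
    lines += [f"author {who}", f"committer {who}", "", message, ""]
    return "\n".join(lines).encode("utf-8")


# A large unsigned commit that nobody has reviewed...
big_unsigned = git_object_id(
    "commit", commit_body(EMPTY_TREE, [], "huge unreviewed change"))

# ...and a one-line typo fix on top of it. These bytes are what a
# signature on the typo fix would cover, and they name the parent by hash.
typo_fix = commit_body(EMPTY_TREE, [big_unsigned], "fix typo")
print(big_unsigned in typo_fix.decode("utf-8"))
```

Because the parent's hash is part of the signed text, signing the typo fix vouches, cryptographically at least, for that exact parent and everything behind it.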

Remember, also, that the only central authority that is practically trustable at this point is your GitHub account. That means that if you are using a third-party CI system, even if you’re using a third-party Git host, you can only run “trusted” code if GitHub is online and responding to requests for its “get me the trusted signing keys for this user” API. This also adds a lot of value to a GitHub credential breach, strongly motivating attackers to sneakily attach their own keys to your account so that their commits in unrelated repos can be “Verified” by you.

Let’s review the pros and cons of turning on commit signing now, before you know what it is going to be used for:

| Pro | Con |
|---|---|
| Green “Verified” badge | Unknown, possibly unlimited future liability for the consequences of running code in a commit you signed |
| | Further implicitly cementing GitHub as a centralized trust authority in the open source world |
| | Introducing unknown reliability problems into infrastructure that relies on commit signatures |
| | A temporary breach of your GitHub credentials now leads to potentially permanent consequences if someone can smuggle a new trusted key in there |
| | New kinds of ongoing process overhead as commit-signing keys become new permanent load-bearing infrastructure, like “what do I do with expired keys”, “how often should I rotate these”, and so on |

I feel like the “Con” column is coming out ahead.

That probably seemed like increasingly unhinged hyperbole, and it was.

In reality, the consequences are unlikely to be nearly so dramatic. The status quo has a very high amount of inertia, and probably the “Verified” badge will remain the only visible difference, except for a few repo-specific esoteric workflows, like pushing trust verification into offline or sandboxed build systems. I do still think that there is some potential for nefariousness around the “unknown and unlimited” dimension of any future plans that might rely on verifying signed commits, but any flaws are likely to be subtle attack chains and not anything flashy and obvious.

But I think that one of the biggest problems in information security is a lack of threat modeling. We encrypt things, we sign things, we institute rotation policies and elaborate useless rules for passwords, because we are looking for a “best practice” that is going to save us from having to think about what our actual security problems are.

I think the actual harm of signing Git commits is to perpetuate an engineering culture of unquestioningly cargo-culting sophisticated and complex tools like cryptographic signatures into new contexts where they have no use.

Just from a baseline utilitarian philosophical perspective, for a given action A, all else being equal, it’s always better not to do A, because taking an action always has some non-zero opportunity cost even if it is just the time taken to do it. Epsilon cost and zero benefit is still a net harm. This is even more true in the context of a complex system. Any action taken in response to a rule in a system is going to interact with all the other rules in that system. You have to pay complexity-rent on every new rule. So an apparently-useless embellishment like signing commits can have potentially far-reaching consequences in the future.

Git commit signing itself is not particularly consequential. I have probably spent more time writing this blog post than the sum total of all the time wasted by all programmers configuring their Git clients to add useless signatures; even the relatively modest readership of this blog will likely transfer more data reading this post than all those signatures will take to transmit to the various Git clients that will read them. If I just convince you not to sign your commits, I don’t think I’m coming out ahead in the felicific calculus here.

What I am actually trying to point out here is that it is useful to carefully consider how to avoid adding junk complexity to your systems. One area where junk tends to leak into designs and cultures particularly easily is in intimidating subjects like trust and safety, where it is easy to get anxious and convince ourselves that piling on more stuff is safer than leaving things simple.

If I can help you avoid adding even a little bit of unnecessary complexity, I think it will have been well worth the cost of the writing, and the reading.

Acknowledgments

Thank you to my patrons who are supporting my writing on this blog. If you like what you’ve read here and you’d like to read more of it, or you’d like to support my various open-source endeavors, you can support my work as a sponsor! I am also available for consulting work if you think your organization could benefit from expertise on topics such as “What else should I not apply a cryptographic signature to?”.

1. Yes yes I know about Heartbleed and Bleichenbacher attacks and adoption of forward-secret ciphers and CRIME and BREACH and none of that is relevant here, okay? Jeez.

2. Do you see what I did there.